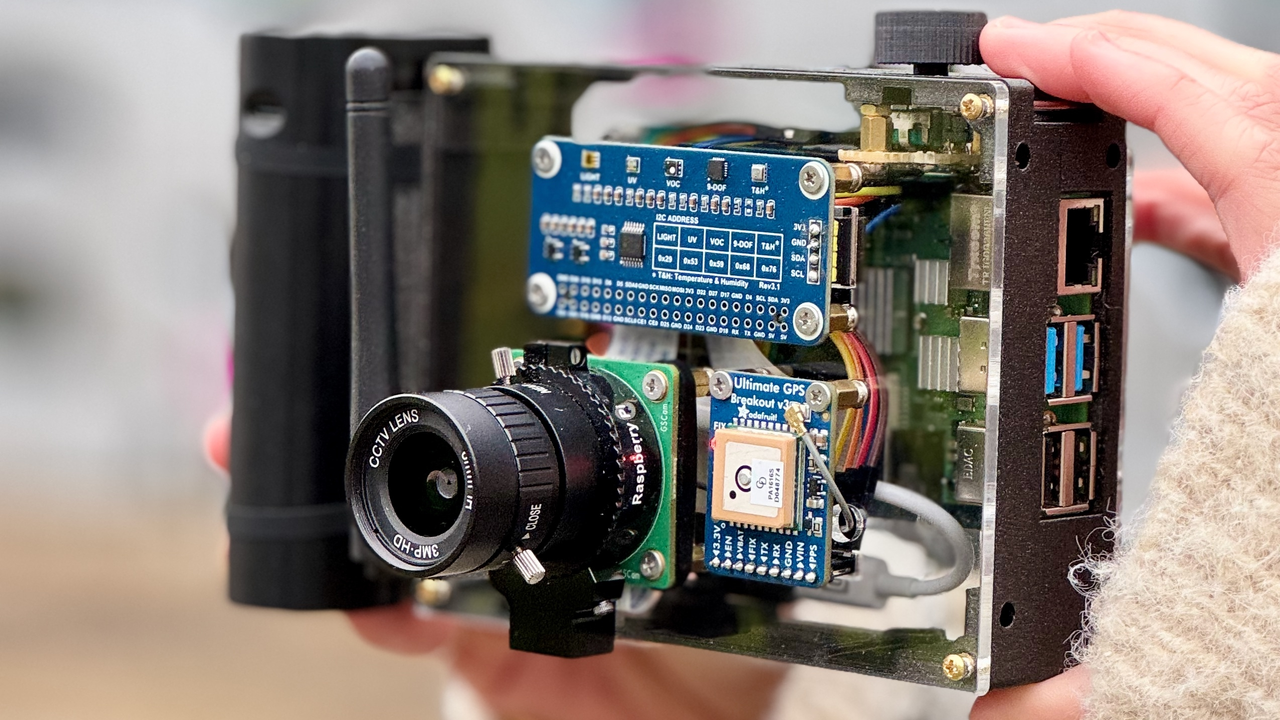

Multi-Modal Kamera

=> An AI-driven camera.

Project description

Products and services powered by artificial intelligence are rapidly introduced into our everyday life, yet its inner workings remain largely inaccessible. Multi-modal AI models, which combine multiple types of data, are no exception. Designed to be seamless, these systems obscure their processes, limiting public understanding and critical engagement. Therefore this project introduces a “seamful” approach, an AI-powered camera that intentionally reveals the model’s decision-making process. Built on an open-source platform using a Raspberry Pi and a self-hosted AI server, this device is able to capture images from your surroundings, while interacting with a Vision-Language Model to generate descriptive outputs. It allows users to ask questions, with the addition of different inputs, such as sensor data captured on site and a retrieval augmented generation system. By making these processes visible, the project aims to develop an intuitive understanding of how these models function while also encouraging reflection on their impact.

Gallery

Special Thanks:

Department of Design Investigations

Photographer: Leo Mühlfeld

Operating: Mia Tesic

Book Layouting: Nina Gstaltner

Book Binding: Lizzy Sharp

Exhibited at:

Upcoming: Angewandte Festival, 2025 (AT)